publications

2024


PROSPECT: Precision Robot Spectroscopy Exploration and Characterization Tool
Nathaniel Hanson*, Gary Lvov*, Vedant Rautela, and 4 more authors
In 2024 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), 2024
* Equal contribution

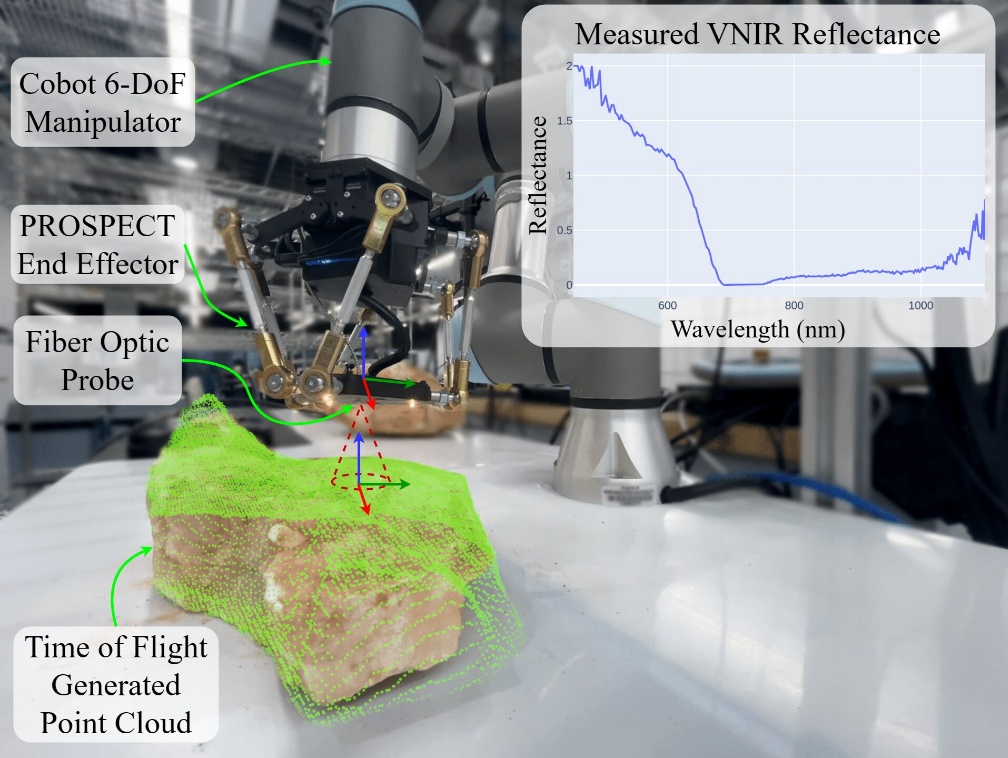

Near-infrared (NIR) spectroscopy is widely used in industrial quality control and automation to test the purity and grade of items. In this research, we propose a novel sensorized end effector and acquisition strategy to capture spectral signatures from objects and register them with a 3D point cloud. Our methodology first takes a 3D scan of an object generated by a time-of-flight depth camera and decomposes the object into a series of planned viewpoints covering the surface. We generate motion plans for a robot manipulator and end effector to visit these viewpoints while maintaining a fixed distance from the surface and alignment with its surface normal. This process is enabled by the spherical motion of the end effector and ensures maximal spectral signal quality. By continuously acquiring surface reflectance values as the end effector scans the target object, the autonomous system develops a four-dimensional model of the target object: position in an R³ coordinate frame, and a reflectance vector denoting the associated spectral signature. We demonstrate this system by building spectral-spatial object profiles of increasingly complex geometries. We show that the proposed system and spectral acquisition planning produce more consistent spectral signals than naïve point-scanning strategies. Our work represents a significant step towards high-resolution spectral-spatial sensor fusion for automated quality assessment.

@inproceedings{10802210, author = {Hanson, Nathaniel and Lvov, Gary and Rautela, Vedant and Hibbard, Samuel and Holand, Ethan and DiMarzio, Charles and Padir, Taşkin}, note = {* Equal contribution}, booktitle = {2024 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS)}, title = {PROSPECT: Precision Robot Spectroscopy Exploration and Characterization Tool}, year = {2024}, pages = {5244-5251}, keywords = {Reflectivity;Point cloud compression;Geometry;Spectroscopy;Three-dimensional displays;Robot kinematics;Robot sensing systems;End effectors;Planning;Surface treatment}, doi = {10.1109/IROS58592.2024.10802210}, }
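For a single surface sample, the viewpoint step described in the PROSPECT abstract (hold a fixed distance from the surface while facing along its normal) reduces to offsetting the point along its unit normal. The Python sketch below illustrates only that geometry under that assumption; it is not the PROSPECT planner, and the function names, the `standoff` parameter, and the `SpectralPoint` record standing in for the four-dimensional (position plus reflectance) model are hypothetical.

```python
import math
from dataclasses import dataclass
from typing import List, Tuple

Vec3 = Tuple[float, float, float]


@dataclass
class SpectralPoint:
    """Hypothetical record for one sample of the 4D spectral-spatial model."""
    position: Vec3            # location in the object's R^3 frame
    reflectance: List[float]  # one reflectance value per wavelength band


def unit(v: Vec3) -> Vec3:
    """Scale a vector to unit length."""
    n = math.sqrt(v[0] ** 2 + v[1] ** 2 + v[2] ** 2)
    if n == 0.0:
        raise ValueError("surface normal has zero length")
    return (v[0] / n, v[1] / n, v[2] / n)


def viewpoint(point: Vec3, normal: Vec3, standoff: float) -> Tuple[Vec3, Vec3]:
    """Return (sensor position, viewing direction) for one surface sample.

    The sensor is placed `standoff` units along the outward unit normal and
    looks back along the negated normal, i.e. straight at the surface.
    """
    n = unit(normal)
    position = (point[0] + standoff * n[0],
                point[1] + standoff * n[1],
                point[2] + standoff * n[2])
    direction = (-n[0], -n[1], -n[2])
    return position, direction


def plan_viewpoints(samples: List[Tuple[Vec3, Vec3]],
                    standoff: float) -> List[Tuple[Vec3, Vec3]]:
    """Apply the same standoff to every (point, normal) pair from the scan."""
    return [viewpoint(p, n, standoff) for p, n in samples]
```

Keeping the standoff constant for every sample is what makes readings comparable across the surface; ordering the viewpoints and generating arm motions between them are separate planning problems not shown here.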

2023


Mobile MoCap: Retroreflector Localization On-The-Go
In 2023 IEEE 19th International Conference on Automation Science and Engineering (CASE), 2023

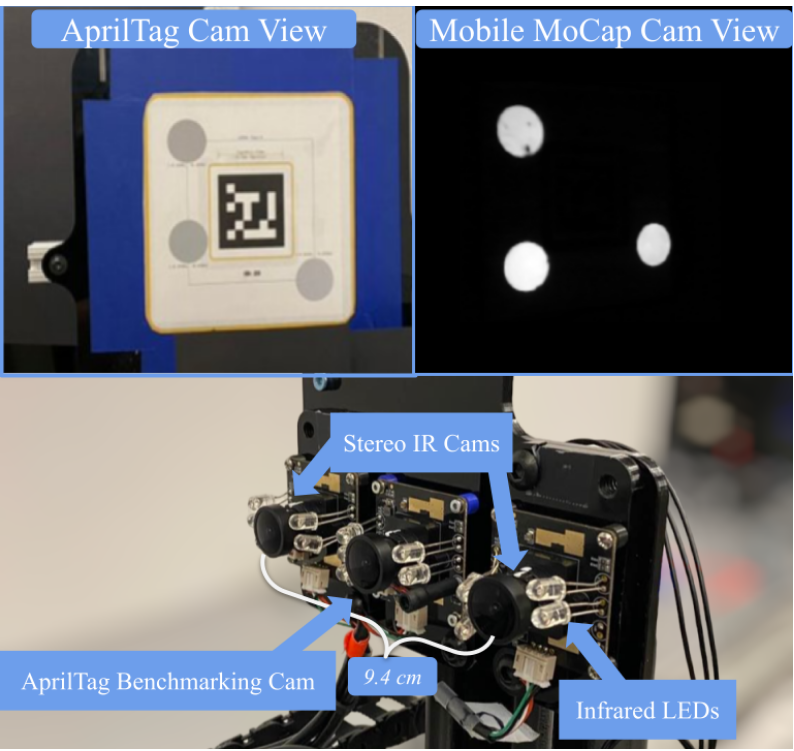

Motion capture through tracking retroreflectors achieves highly accurate pose estimation, which is frequently used in robotics. Unlike commercial motion capture systems, fiducial marker-based tracking methods, such as AprilTags, can perform relative localization without requiring a static camera setup. However, popular pose estimation methods based on fiducial markers have lower localization accuracy than commercial motion capture systems. We propose Mobile MoCap, a system that uses inexpensive near-infrared cameras for accurate relative localization even while in motion. We present a retroreflector feature detector that performs 6-DoF (six degrees-of-freedom) tracking and operates with minimal camera exposure times to reduce motion blur. To evaluate the proposed localization technique while in motion, we mount our Mobile MoCap system, as well as an RGB camera to benchmark against fiducial markers, onto a precision-controlled linear rail and servo. The fiducial marker approach employs AprilTags, which are pervasively used for localization in robotics. We evaluate the two systems at varying distances, marker viewing angles, and relative velocities. Across all experimental conditions, our stereo-based Mobile MoCap system obtains higher position and orientation accuracy than the fiducial approach. The code for Mobile MoCap is implemented in ROS 2 and is publicly available at https://github.com/RIVeR-Lab/mobile_mocap.

@inproceedings{10260562, author = {Lvov, Gary and Zolotas, Mark and Hanson, Nathaniel and Allison, Austin and Hubbard, Xavier and Carvajal, Michael and Padir, Taşkin}, booktitle = {2023 IEEE 19th International Conference on Automation Science and Engineering (CASE)}, title = {Mobile MoCap: Retroreflector Localization On-The-Go}, year = {2023}, volume = {}, number = {}, pages = {1-7}, keywords = {Location awareness;Tracking;Robot vision systems;Pose estimation;Systems architecture;Cameras;Motion capture}, doi = {10.1109/CASE56687.2023.10260562}, }
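The abstract combines short-exposure retroreflector detection with a stereo camera pair. A minimal sketch of two generic building blocks, assuming a grayscale near-infrared frame given as nested lists and a rectified stereo pair, is to group bright pixels into connected blobs, take their centroids, and recover depth from disparity with the standard relation Z = f * B / d. The threshold, focal length, and baseline are hypothetical inputs, and this is not the Mobile MoCap feature detector, which additionally estimates full 6-DoF pose.

```python
from collections import deque
from typing import List, Tuple

Image = List[List[int]]


def detect_blobs(image: Image, threshold: int) -> List[Tuple[float, float]]:
    """Return (row, col) centroids of 4-connected regions with intensity >= threshold."""
    rows, cols = len(image), len(image[0])
    seen = [[False] * cols for _ in range(rows)]
    centroids: List[Tuple[float, float]] = []
    for r in range(rows):
        for c in range(cols):
            if seen[r][c] or image[r][c] < threshold:
                continue
            # Breadth-first flood fill collects one bright region (one candidate marker).
            queue = deque([(r, c)])
            seen[r][c] = True
            pixels = []
            while queue:
                y, x = queue.popleft()
                pixels.append((y, x))
                for dy, dx in ((1, 0), (-1, 0), (0, 1), (0, -1)):
                    ny, nx = y + dy, x + dx
                    if (0 <= ny < rows and 0 <= nx < cols
                            and not seen[ny][nx] and image[ny][nx] >= threshold):
                        seen[ny][nx] = True
                        queue.append((ny, nx))
            n = len(pixels)
            centroids.append((sum(p[0] for p in pixels) / n,
                              sum(p[1] for p in pixels) / n))
    return centroids


def stereo_depth(x_left: float, x_right: float, focal_px: float, baseline: float) -> float:
    """Depth from a rectified stereo pair: Z = f * B / d, with d = x_left - x_right.

    The result is in the same units as `baseline`.
    """
    disparity = x_left - x_right
    if disparity <= 0:
        raise ValueError("expected positive disparity for a point in front of the cameras")
    return focal_px * baseline / disparity
```

Short exposures leave retroreflectors as the only bright regions in the frame, which is why a plain intensity threshold is a reasonable first pass in this sketch; a real detector would also reject blobs by size and shape.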

2022


Occluded object detection and exposure in cluttered environments with automated hyperspectral anomaly detection
Nathaniel Hanson, Gary Lvov, and Taşkin Padir
Frontiers in Robotics and AI, 2022

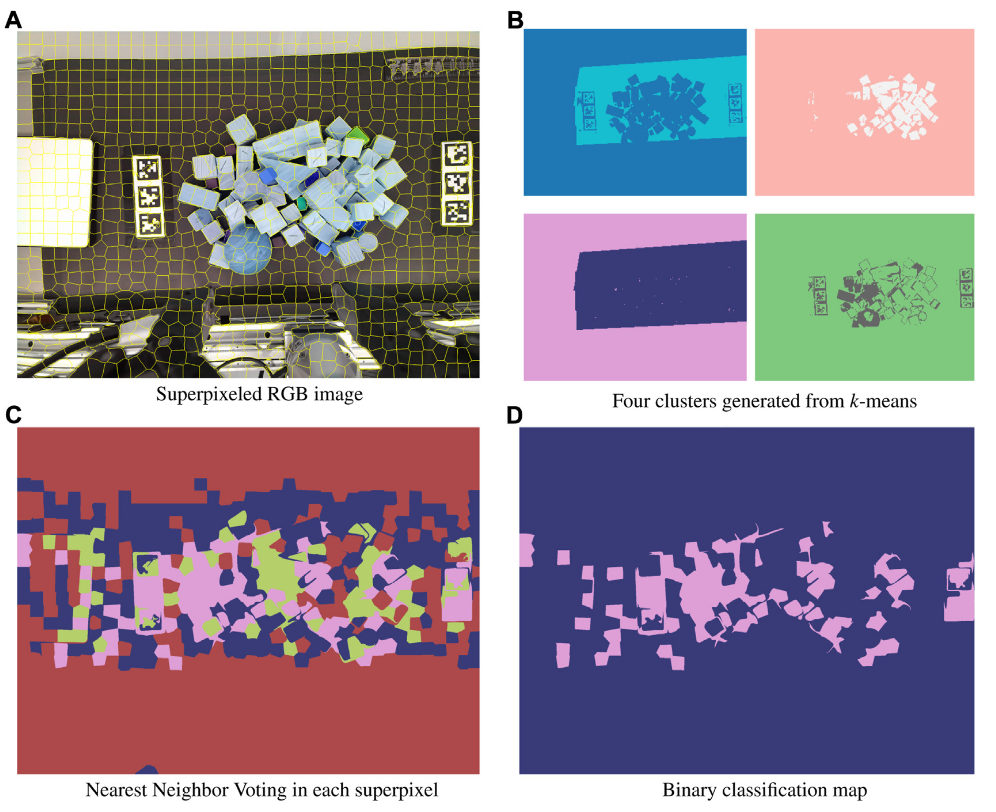

Cluttered environments with partial object occlusions pose significant challenges to robot manipulation. In settings composed of one dominant object type and various undesirable contaminants, occlusions make it difficult to both recognize and isolate undesirable objects. Spatial features alone are not always sufficiently distinct to reliably identify anomalies under multiple layers of clutter, with only a fractional part of the object exposed. We create a multi-modal data representation of cluttered object scenes pairing depth data with a registered hyperspectral data cube. Hyperspectral imaging provides pixel-wise Visible Near-Infrared (VNIR) reflectance spectral curves that remain consistent within similar material types. Spectral reflectance data is grounded in the chemical-physical properties of an object, making spectral curves an excellent modality to differentiate inter-class material types. We propose a new automated method to perform hyperspectral anomaly detection in cluttered workspaces with the goal of improving robot manipulation. We first assume the dominance of a single material class and coarsely identify the dominant, non-anomalous class. Next, these labels are used to train an unsupervised autoencoder to identify anomalous pixels through reconstruction error. To tie our anomaly detection to robot actions, we then apply a set of heuristically evaluated motion primitives to perturb and further expose local areas containing anomalies. The utility of this approach is demonstrated in numerous cluttered environments including organic and inorganic materials. In each of our four constructed scenarios, our proposed anomaly detection method consistently increases the exposed surface area of anomalies.
Our work advances robot perception for cluttered environments by incorporating multi-modal anomaly detection aided by hyperspectral sensing into detecting fractional object presence without the need for laboriously curated labels.

@article{10.3389/frobt.2022.982131, author = {Hanson, Nathaniel and Lvov, Gary and Padir, Taşkin}, title = {Occluded object detection and exposure in cluttered environments with automated hyperspectral anomaly detection}, journal = {Frontiers in Robotics and AI}, volume = {9}, year = {2022}, url = {https://www.frontiersin.org/journals/robotics-and-ai/articles/10.3389/frobt.2022.982131}, doi = {10.3389/frobt.2022.982131}, issn = {2296-9144}, }
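The detection step in this abstract (fit a model on pixels from the dominant class, then flag pixels it reconstructs poorly) can be illustrated without a neural network. The sketch below swaps the paper's autoencoder for a one-component linear autoencoder, which amounts to projecting each centred spectrum onto the leading principal component of the dominant-class spectra; the function names, iteration count, and threshold are assumptions for illustration only.

```python
import math
from typing import List

Spectrum = List[float]


def mean_spectrum(spectra: List[Spectrum]) -> Spectrum:
    """Per-band mean over a set of spectra."""
    n = len(spectra)
    return [sum(s[i] for s in spectra) / n for i in range(len(spectra[0]))]


def leading_direction(spectra: List[Spectrum], mean: Spectrum, iters: int = 100) -> Spectrum:
    """Leading principal component of the centred spectra, by power iteration."""
    dim = len(mean)
    centred = [[s[i] - mean[i] for i in range(dim)] for s in spectra]
    v = [1.0 / math.sqrt(dim)] * dim
    for _ in range(iters):
        # w = (sum_k x_k x_k^T) v, i.e. the scatter matrix applied to v.
        w = [0.0] * dim
        for x in centred:
            proj = sum(a * b for a, b in zip(x, v))
            for i in range(dim):
                w[i] += proj * x[i]
        norm = math.sqrt(sum(c * c for c in w))
        if norm == 0.0:
            break  # no variation along v; keep the current direction
        v = [c / norm for c in w]
    return v


def reconstruction_error(spectrum: Spectrum, mean: Spectrum, direction: Spectrum) -> float:
    """Squared error after encoding to one coefficient and decoding back."""
    x = [a - b for a, b in zip(spectrum, mean)]
    code = sum(a * b for a, b in zip(x, direction))  # encoder: 1-D code
    recon = [code * d for d in direction]             # decoder: back to all bands
    return sum((a - b) ** 2 for a, b in zip(x, recon))


def flag_anomalies(pixels: List[Spectrum], dominant: List[Spectrum],
                   threshold: float) -> List[bool]:
    """Fit on dominant-class pixels only, then flag pixels it reconstructs poorly."""
    mean = mean_spectrum(dominant)
    direction = leading_direction(dominant, mean)
    return [reconstruction_error(p, mean, direction) > threshold for p in pixels]
```

Because the model only ever sees dominant-class spectra, anything it reconstructs well looks like the dominant material, and a large error marks a likely contaminant; a nonlinear autoencoder follows the same logic with a more expressive encoder and decoder.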

Deep Reinforcement Learning based Robot Navigation in Dynamic Environments using Occupancy Values of Motion Primitives
In 2022 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), 2022

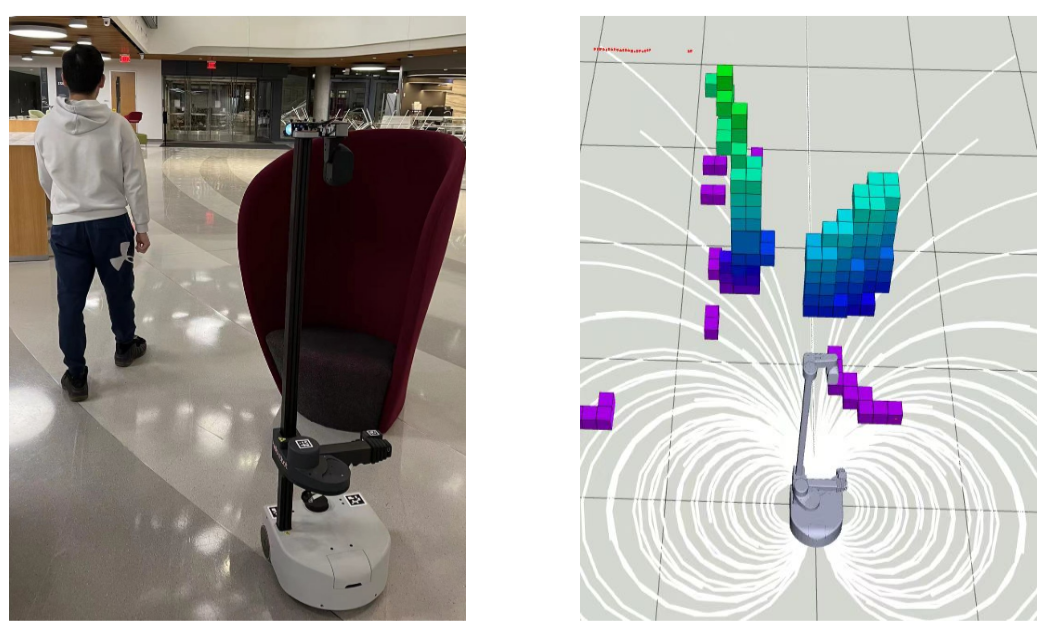

This paper presents a Deep Reinforcement Learning-based navigation approach in which we define the occupancy observations as heuristic evaluations of motion primitives, rather than using raw sensor data. Our method enables fast mapping of the occupancy data, generated by multi-sensor fusion, into trajectory values in the 3D workspace. The computationally efficient trajectory evaluation allows dense sampling of the action space. We utilize our occupancy observations in different data structures to analyze their effects on both the training process and navigation performance. We train and test our methodology on two different robots within challenging physics-based simulation environments including static and dynamic obstacles. We benchmark our occupancy representations against other conventional data structures from state-of-the-art methods. The trained navigation policies are also validated successfully with physical robots in dynamic environments. The results show that our method not only decreases the required training time but also improves navigation performance compared to other occupancy representations. The open-source implementation of our work and all related information are available at https://github.com/RIVeR-Lab/tentabot.

@inproceedings{9982133, author = {Akmandor, Neşet Ünver and Li, Hongyu and Lvov, Gary and Dusel, Eric and Padir, Taşkin}, booktitle = {2022 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS)}, title = {Deep Reinforcement Learning based Robot Navigation in Dynamic Environments using Occupancy Values of Motion Primitives}, year = {2022}, volume = {}, number = {}, pages = {11687-11694}, keywords = {Training;Deep learning;Three-dimensional displays;Navigation;Neural networks;Reinforcement learning;Benchmark testing}, doi = {10.1109/IROS47612.2022.9982133}, }
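To make "occupancy values of motion primitives" concrete, here is a minimal sketch that scores each sampled trajectory by how close occupied points lie to it and stacks the scores into a fixed-length observation vector for a policy. The closeness weighting, the `radius` parameter, and the straight-line primitives are hypothetical stand-ins, not the heuristic used in the paper or in the tentabot repository.

```python
import math
from typing import List, Sequence, Tuple

Point = Tuple[float, float, float]


def straight_primitive(heading: float, length: float, n_samples: int) -> List[Point]:
    """Sample points along a straight-line primitive in the robot frame (z = 0)."""
    return [(length * k / n_samples * math.cos(heading),
             length * k / n_samples * math.sin(heading),
             0.0)
            for k in range(1, n_samples + 1)]


def occupancy_value(primitive: Sequence[Point], occupied: Sequence[Point],
                    radius: float) -> float:
    """Closeness-weighted count of occupied points near a primitive's samples.

    Each occupied point within `radius` of a sample adds 1 - dist / radius, so
    obstacles lying on the trajectory weigh more than ones near the edge.
    """
    total = 0.0
    for s in primitive:
        for o in occupied:
            d = math.dist(s, o)  # Euclidean distance (Python 3.8+)
            if d < radius:
                total += 1.0 - d / radius
    return total


def occupancy_observation(primitives: Sequence[Sequence[Point]],
                          occupied: Sequence[Point], radius: float) -> List[float]:
    """One value per primitive: a fixed-length observation for the policy network."""
    return [occupancy_value(p, occupied, radius) for p in primitives]
```

For example, `occupancy_observation([straight_primitive(h, 1.0, 4) for h in (0.0, math.pi / 2)], [(0.5, 0.0, 0.0)], 0.3)` scores the forward primitive above zero and the sideways one at exactly zero. The observation length depends only on the number of primitives, not on how many sensor points arrive, which is one reason a representation like this can be cheaper to learn from than raw sensor data.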